However, if you request a dynamic website in your Python script, you won't get the rendered HTML page content, because the content is filled in by JavaScript after the page loads. It can be challenging to wrap your head around a long block of HTML code. To make it easier to read, you can use an HTML formatter to clean it up automatically. Good readability helps you better understand the structure of any code block.
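As a rough illustration of what an HTML formatter does, here is a minimal sketch that uses only Python's standard-library `html.parser`. The names `HtmlIndenter` and `format_html` are made up for this example, and the short `VOID_TAGS` set is an assumption, not a complete list. It puts each tag and text node on its own line, so it is meant for reading markup, not for re-serving it.

```python
from html.parser import HTMLParser

# Assumption: a short, incomplete list of tags that have no closing tag.
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}


class HtmlIndenter(HTMLParser):
    """Re-emit HTML with one tag or text node per line, indented by depth."""

    def __init__(self, indent="  "):
        super().__init__()
        self.indent = indent
        self.depth = 0
        self.lines = []

    def _emit(self, text):
        self.lines.append(self.indent * self.depth + text)

    def handle_starttag(self, tag, attrs):
        # get_starttag_text() returns the tag as it was written in the source.
        self._emit(self.get_starttag_text())
        if tag not in VOID_TAGS:
            self.depth += 1

    def handle_startendtag(self, tag, attrs):
        # Self-closing tags such as <br/> never change the depth.
        self._emit(self.get_starttag_text())

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS:
            self.depth = max(self.depth - 1, 0)
        self._emit(f"</{tag}>")

    def handle_data(self, data):
        text = data.strip()
        if text:
            self._emit(text)


def format_html(html):
    parser = HtmlIndenter()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.lines)


raw = "<html><body><h1>Jobs</h1><p>Python <b>developer</b></p></body></html>"
print(format_html(raw))
```

For real projects, a dedicated formatter or a parsing library's pretty-printer is likely a better choice; this sketch only shows the idea of indenting by nesting depth.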

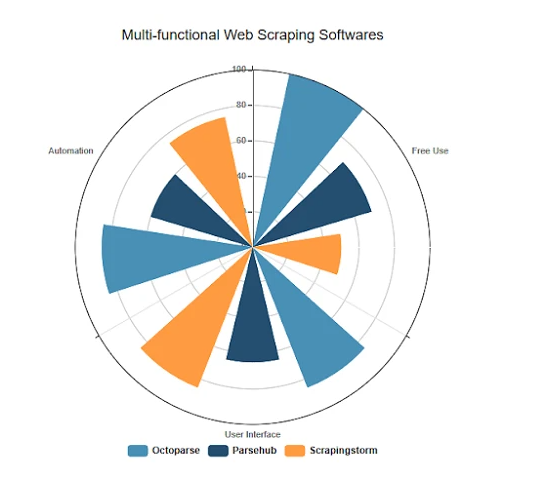

Web Scraper offers full JavaScript execution, waiting for Ajax requests, pagination handlers, and page scroll-down. Cheerio does not interpret the result as a web browser, produce a visual rendering, apply CSS, load external resources, or execute JavaScript; that's why it's so fast. Like Puppeteer, Playwright is also an open-source library that anyone can use for free. Playwright provides cross-browser support: it can drive Chromium, WebKit, and Firefox. Octoparse provides cloud services and IP proxy servers to bypass ReCaptcha and blocking. Web Unblocker lets you extend your sessions with the same proxy to make multiple requests.
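To see why a parser that does not execute JavaScript misses dynamic content, consider the following sketch. It uses Python's standard-library `html.parser` as a stand-in for a static parser (it is not Cheerio, which is a Node.js library), and the page markup, `TextCollector`, and `visible_text` are hypothetical names invented for the example.

```python
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Collect visible text while skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_text(html):
    parser = TextCollector()
    parser.feed(html)
    parser.close()
    return parser.chunks


# Hypothetical page: the price is only filled in by JavaScript after load.
page = """
<div id="product">
  <h2>Widget</h2>
  <span id="price"></span>
  <script>document.getElementById("price").textContent = "$19.99";</script>
</div>
"""

# The script is never run, so the price span stays empty for the parser.
print(visible_text(page))
```

The parser only sees the markup as delivered, so the price never appears in the extracted text. Getting it would require a tool that actually runs the page's JavaScript, such as a browser driven by Puppeteer or Playwright.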

Start The Autoscraper

Web scraping has become essential for individuals and businesses that want to extract valuable insights from online sources. There are many techniques and tools available for data collection, and each web scraping technique has its strengths and limitations. Choosing a technique that suits your data collection project is therefore challenging. The choice is often crucial for avoiding being blocked while accessing a site. Simply put, a web scraper is a tool for extracting data from one or more websites; a crawler, on the other hand, finds or discovers URLs or links on the web.
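The scraper/crawler distinction can be sketched in a few lines of standard-library Python. In this illustration, `crawl` only discovers links and `scrape` only extracts data (here, the text of each `<h2>`); the class names, function names, and sample markup are all assumptions made for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkFinder(HTMLParser):
    """Crawler step: discover the URLs a page links to."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page's own URL.
                self.links.append(urljoin(self.base_url, href))


class TitleScraper(HTMLParser):
    """Scraper step: extract one piece of data, here the text of each <h2>."""

    def __init__(self):
        super().__init__()
        self._in_h2 = False
        self.titles = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_h2 = False

    def handle_data(self, data):
        if self._in_h2 and data.strip():
            self.titles.append(data.strip())


def crawl(html, base_url):
    finder = LinkFinder(base_url)
    finder.feed(html)
    finder.close()
    return finder.links


def scrape(html):
    scraper = TitleScraper()
    scraper.feed(html)
    scraper.close()
    return scraper.titles


# Hypothetical listing page used only for illustration.
html = """
<h2>Python Developer</h2><a href="/jobs/1">details</a>
<h2>Data Engineer</h2><a href="/jobs/2">details</a>
"""
print(crawl(html, "https://example.com/jobs"))
print(scrape(html))
```

In practice the two are often combined: the crawler feeds newly discovered URLs to the scraper, which extracts data from each page.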

The terms are sometimes used interchangeably, and both deal with the process of extracting information. There are as many answers as there are websites online, and more. This information can be a great resource to build applications around, and knowledge of writing such code can also be used for automated web testing.

Beautiful Soup: Build A Web Scraper With Python

While some users might prefer a web crawler designed to work on macOS, others might prefer a scraping tool that works well on Windows. As almost everything is connected to the Internet these days, you will probably find a library for making HTTP requests in any programming language. By contrast, using web browsers, such as Firefox and Chrome, is slower.
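In Python, for instance, the standard library already ships an HTTP client. The sketch below shows one way to fetch a page with `urllib.request`; the helper names `build_request` and `fetch_html` and the `example-scraper/0.1` User-Agent string are placeholders invented for this example.

```python
from urllib.request import Request, urlopen


def build_request(url, user_agent="example-scraper/0.1"):
    """Create a GET request that identifies the client via a User-Agent header."""
    # The User-Agent value is a placeholder; use one that identifies your project.
    return Request(url, headers={"User-Agent": user_agent})


def fetch_html(url, timeout=10):
    """Download a page and decode it using the charset the server declares."""
    with urlopen(build_request(url), timeout=timeout) as response:
        # Fall back to UTF-8 when the server does not declare a charset.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Calling `fetch_html("https://example.com")` would return the page's HTML as a string, assuming the site is reachable and you are allowed to request it. Because no browser is started, this is typically much faster than driving Firefox or Chrome, at the cost of not running any JavaScript.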

- In conclusion, automated Google Sheets web scraping can save you a lot of time and effort compared to manual web scraping.

- Web scraping collects large data sets for you, and you can increase productivity by using the time saved to do other tasks.

- In short, a web scraper is a tool for extracting data from one or more websites; a crawler, meanwhile, finds or discovers URLs or links on the web.

Unlike in the DIY workflow, with RPA you don't need to write code every time you collect new data from new sources. RPA platforms usually provide built-in tools for web scraping, which saves time and is much easier to use. Websites often add new features and apply structural changes, which bring scraping tools to a halt. This happens when the software is written against specific elements of the website's code. One can write a few lines of code in Python to complete a large scraping task. Also, since Python is one of the most popular programming languages, its community is very active.
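One way to soften breakage from structural changes is to try several candidate selectors in order instead of hard-coding a single one. The following is a minimal sketch of that idea using only the standard library; `ClassTextFinder`, `extract_first`, and the class names `price` and `product-price` are hypothetical and stand in for whatever elements your target site uses.

```python
from html.parser import HTMLParser


class ClassTextFinder(HTMLParser):
    """Collect the text of elements whose class attribute contains a given name."""

    def __init__(self, class_name):
        super().__init__()
        self.class_name = class_name
        self._capturing_tag = None
        self.matches = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self._capturing_tag is None and self.class_name in classes:
            self._capturing_tag = tag
            self.matches.append("")

    def handle_endtag(self, tag):
        # Simplification: capture stops at the first closing tag of the same
        # name, so nested elements of the same tag are not handled.
        if tag == self._capturing_tag:
            self._capturing_tag = None

    def handle_data(self, data):
        if self._capturing_tag is not None:
            self.matches[-1] += data


def extract_first(html, candidate_classes):
    """Try each class name in order and return the first non-empty match."""
    for class_name in candidate_classes:
        finder = ClassTextFinder(class_name)
        finder.feed(html)
        finder.close()
        texts = [m.strip() for m in finder.matches if m.strip()]
        if texts:
            return class_name, texts[0]
    return None, None


# Hypothetical markup before and after a site redesign.
old_layout = '<span class="price">$10</span>'
new_layout = '<span class="product-price bold">$12</span>'
candidates = ["price", "product-price"]

print(extract_first(old_layout, candidates))
print(extract_first(new_layout, candidates))
```

Returning the class name that matched makes it easy to log when a fallback was used, which is an early signal that the site layout has changed. A fallback list does not remove the need for maintenance; it only buys some time.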